On July 18, 2024, at the Genetic and Evolutionary Computation Conference (GECCO 2024) in Melbourne, Australia, Professor Ran Cheng’s research group received the Best Paper Award. The paper, titled “Tensorized NeuroEvolution of Augmenting Topologies for GPU Acceleration,” lists postgraduate student Lishuang Wang as first author, undergraduate students Mengfei Zhao and Enyu Liu as second and third authors, postgraduate student Kebin Sun as fourth author, and Professor Ran Cheng as corresponding author.

The Genetic and Evolutionary Computation Conference (GECCO), organized by ACM SIGEVO, is one of the most prestigious and influential international conferences in computational intelligence. Each year it brings together leading researchers from around the world to present and discuss the latest advances in evolutionary computation. Since its first edition in 1999, GECCO has become the flagship conference of the field, internationally recognized for its high academic standards.

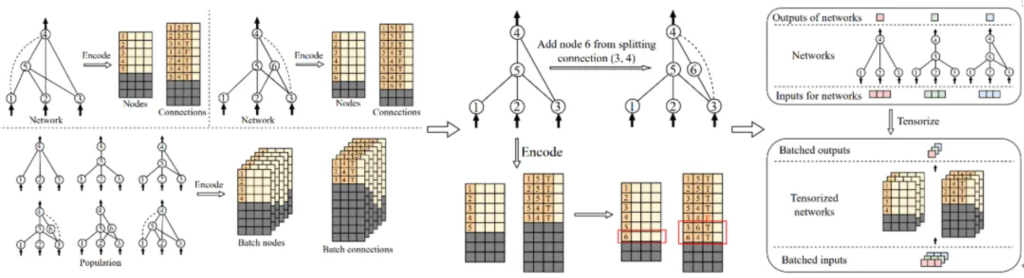

The NeuroEvolution of Augmenting Topologies (NEAT) algorithm, proposed by Kenneth Stanley and Risto Miikkulainen in 2002, evolves both the weights and the structure of neural networks and has had a profound impact on areas such as artificial intelligence, robotic control, and autonomous driving. However, because every individual in a NEAT population can have a different network topology, the traditional algorithm is difficult to parallelize and becomes computationally expensive on large-scale problems. To address this challenge, Professor Ran Cheng’s team developed the TensorNEAT library. Through tensorization, NEAT and its derivative algorithms (including CPPN and HyperNEAT) now fully support GPU acceleration.

Tensorization, a technique that transforms data structures and operations into tensor formats, is particularly well suited to efficient parallel computation on GPUs. TensorNEAT converts the diverse network topologies of a NEAT population into tensors, so that key operations can be executed in parallel across the entire population rather than one network at a time, which greatly improves computational efficiency. Experimental results show that TensorNEAT achieves speedups of over 500 times compared with traditional NEAT implementations across various tasks and hardware configurations. TensorNEAT is open source and available on GitHub: https://github.com/EMI-Group/tensorneat.
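To make the idea of tensorizing a population more concrete, the following is a minimal sketch in Python with NumPy. It is not TensorNEAT’s API or its actual encoding; the padded layout, the helper names `genomes_to_tensor` and `forward_population`, and the choice of `tanh` as the activation are all hypothetical, chosen only for illustration. The sketch assumes each genome is a list of `(src, dst, weight)` connections over a fixed number of node slots, with input nodes first, output nodes in the last slots, and every connection pointing from a lower index to a higher one (a feedforward network in topological order). Networks of different shapes are padded into one dense `(population, nodes, nodes)` tensor, and each node is then evaluated for the whole population with a single batched operation.

```python
import numpy as np


def genomes_to_tensor(genomes, n_nodes):
    """Pack variable-size connection lists into one dense (P, N, N) tensor.

    Hypothetical encoding: weights[p, dst, src] holds the weight of the
    connection src -> dst in network p; missing connections stay 0.0.
    """
    weights = np.zeros((len(genomes), n_nodes, n_nodes))
    for p, connections in enumerate(genomes):
        for src, dst, w in connections:
            weights[p, dst, src] = w
    return weights


def forward_population(weights, inputs, num_outputs):
    """Evaluate every padded feedforward network in the population at once.

    weights: (P, N, N) tensor from genomes_to_tensor.
    inputs:  (P, I) array, one row of input values per network.
    Returns a (P, num_outputs) array of output activations.
    """
    pop_size, n_nodes, _ = weights.shape
    n_inputs = inputs.shape[1]
    values = np.zeros((pop_size, n_nodes))
    values[:, :n_inputs] = inputs
    # Nodes are assumed to be in topological order, so node k depends only on
    # nodes 0..k-1. One batched dot product updates node k in all P networks.
    # Unused padding slots have no incoming weights, so they stay at tanh(0) = 0
    # and, having no outgoing weights either, do not affect other nodes.
    for k in range(n_inputs, n_nodes):
        incoming = np.einsum("pj,pj->p", weights[:, k, :k], values[:, :k])
        values[:, k] = np.tanh(incoming)
    # Output nodes occupy the last num_outputs slots in this layout.
    return values[:, -num_outputs:]


if __name__ == "__main__":
    # Two networks with 2 inputs (slots 0, 1), 1 hidden slot (2), 1 output (3).
    population = [
        [(0, 3, 1.0), (1, 3, -1.0)],  # direct connections, hidden slot unused
        [(0, 2, 2.0), (2, 3, 1.0)],   # input 0 -> hidden -> output
    ]
    w = genomes_to_tensor(population, n_nodes=4)
    print(forward_population(w, np.array([[0.5, 0.5], [0.5, 0.0]]), num_outputs=1))
```

The per-node loop is kept sequential because of the dependency order inside each network, while the population dimension is fully vectorized. On a GPU framework the same layout would let each step run as one kernel over all individuals, which is the kind of population-level parallelism the paragraph above describes.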