Shihua Huang, Zhichao Lu, Ran Cheng*, Cheng He

Abstract:

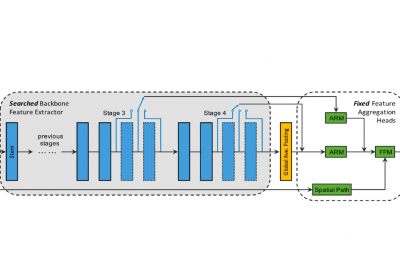

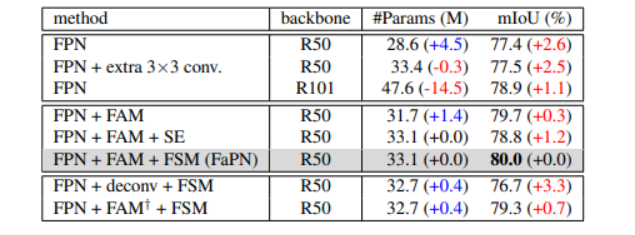

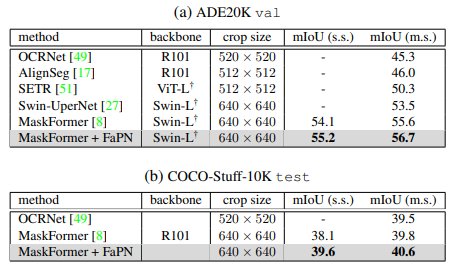

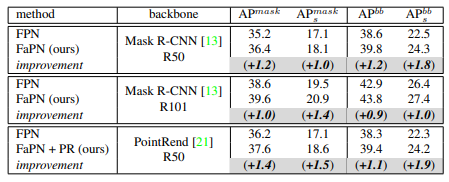

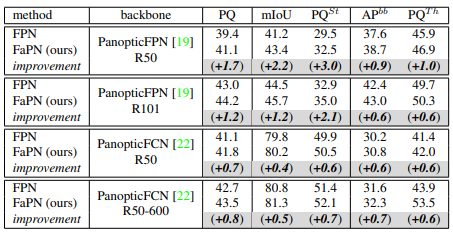

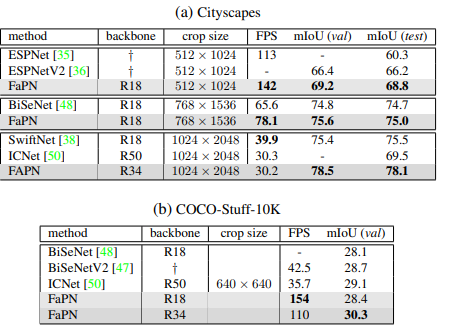

Recent advancements in deep neural networks have made remarkable leaps forward in dense image prediction. However, the issue of feature alignment remains neglected by most existing approaches for the sake of simplicity. Direct pixel-wise addition between upsampled and local features leads to feature maps with misaligned contexts that, in turn, translate into misclassifications in prediction, especially on object boundaries. In this paper, we propose a feature alignment module that learns transformation offsets of pixels to contextually align upsampled higher-level features, and a feature selection module that emphasizes lower-level features with rich spatial details. We then integrate these two modules in a top-down pyramidal architecture and present the Feature-aligned Pyramid Network (FaPN). Extensive experimental evaluations on four dense prediction tasks and four datasets demonstrate the efficacy of FaPN, yielding an overall improvement of 1.2–2.6 points in AP / mIoU over FPN when paired with Faster / Mask R-CNN. In particular, FaPN achieves a state-of-the-art 56.7% mIoU on ADE20K when integrated within MaskFormer. [Source Code]
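To make the two modules more concrete, here is a minimal NumPy sketch of one top-down, FaPN-style merge step. It is not the authors' implementation: the helper names (`conv1x1`, `upsample2x`, `feature_selection`, `bilinear_sample`, `align`, `fapn_step`) and all weight shapes are hypothetical, every convolution is reduced to a 1×1 channel mix, the offsets are assumed to come from a linear layer over the concatenated lower-level and upsampled maps, and plain bilinear resampling at per-pixel offsets stands in for the deformable convolution used by the actual alignment module.

```python
import numpy as np


def conv1x1(weight, feat):
    """1x1 convolution as a channel mix: weight (C_out, C_in), feat (C_in, H, W)."""
    return np.einsum("oc,chw->ohw", weight, feat)


def upsample2x(feat):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return feat.repeat(2, axis=1).repeat(2, axis=2)


def feature_selection(c, w_att, w_proj):
    """Assumed selection step: re-weight channels of a lower-level map, then project.

    c: (C, H, W); w_att: (C, C) hypothetical attention weights; w_proj: (C_out, C).
    """
    z = c.mean(axis=(1, 2))                    # global average pooling -> (C,)
    u = 1.0 / (1.0 + np.exp(-(w_att @ z)))     # per-channel importance in (0, 1)
    return conv1x1(w_proj, c + u[:, None, None] * c)  # residual re-weighting, then 1x1 conv


def bilinear_sample(feat, ys, xs):
    """Sample a (C, H, W) map at fractional (ys, xs) positions, clamped to the border."""
    _, h, w = feat.shape
    ys = np.clip(ys, 0, h - 1)
    xs = np.clip(xs, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy, wx = ys - y0, xs - x0                  # fractional parts act as interpolation weights
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy


def align(upsampled, offsets):
    """Resample each pixel of `upsampled` (C, H, W) at its own offset (2, H, W) = (dy, dx)."""
    _, h, w = upsampled.shape
    gy, gx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    return bilinear_sample(upsampled, gy + offsets[0], gx + offsets[1])


def fapn_step(c_lower, p_higher, w_att, w_proj, w_off):
    """One hypothetical top-down merge: select lower-level features, align upsampled ones, add."""
    c_sel = feature_selection(c_lower, w_att, w_proj)              # (C_out, 2H, 2W)
    up = upsample2x(p_higher)                                      # (C_out, 2H, 2W)
    offsets = conv1x1(w_off, np.concatenate([c_sel, up], axis=0))  # (2, 2H, 2W): (dy, dx)
    return align(up, offsets) + c_sel                              # aligned features, then addition


rng = np.random.default_rng(0)
c2 = rng.standard_normal((8, 16, 16))   # finer, lower-level map
p3 = rng.standard_normal((4, 8, 8))     # coarser, higher-level map
out = fapn_step(
    c2, p3,
    w_att=0.1 * rng.standard_normal((8, 8)),
    w_proj=0.1 * rng.standard_normal((4, 8)),
    w_off=0.1 * rng.standard_normal((2, 8)),
)
print(out.shape)  # (4, 16, 16)
```

The weights here are random rather than learned, so the example only shows the data flow: zero offsets leave the upsampled map unchanged, and non-zero offsets let each pixel pull its context from a slightly different location before the addition that plain FPN performs directly.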

Results

Ablation Study

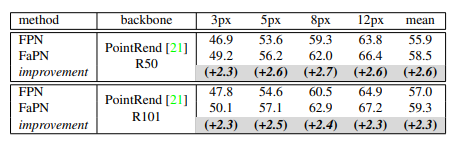

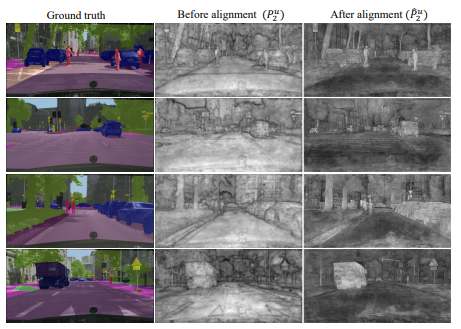

Boundary Prediction Analysis

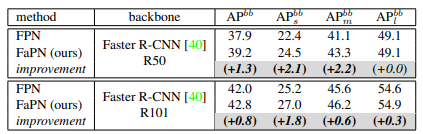

Object Detection

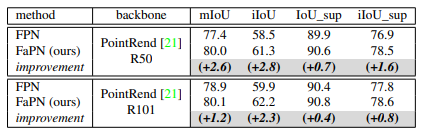

Semantic Segmentation

Instance Segmentation

Panoptic Segmentation

Real-time Semantic Segmentation

Citation

@article{huang2021fapn,
  title={FaPN: Feature-aligned Pyramid Network for Dense Image Prediction},
  author={Huang, Shihua and Lu, Zhichao and Cheng, Ran and He, Cheng},
  journal={IEEE ICCV},
  year={2021}
}

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61903178, 61906081, and U20A20306) and the Program for Guangdong Introducing Innovative and Entrepreneurial Teams (Grant No. 2017ZT07X386).

![[ICCV 2021] FaPN: Feature-aligned Pyramid Network for Dense Image Prediction](https://www.emigroup.tech/wp-content/uploads/2021/08/fapn-1-567x420.png)